Creating a game character from scratch by hand


The main idea of the project is to hand-draw a character for a casual game from the client's description and then train a Stable Diffusion model of that character.

Gideon Fletcher — Master of Time

Gideon Fletcher, an ashen blond with piercing blue eyes, lives in the late 17th century, a time of great change and secret societies. His appearance always stands out in a crowd: tall boots, a blue velvet frock coat that highlights his noble heritage, and a perpetually restrained yet mysterious smile.

Gideon is the heir to an ancient lineage of alchemists and mages; for centuries his family has guarded secrets that remain unknown to ordinary mortals to this day. His most remarkable ability, however, is control over time. Gideon can slow down, speed up, or even momentarily stop time around him. This power allows him not only to evade dangers and traps but also to always stay one step ahead of his enemies.

A consistent character in Stable Diffusion based on the illustration.

To create a model of this drawn character in Stable Diffusion, we need a dataset with about 10-15 images of the face from different angles. Instead of drawing all 15 images from scratch, we can set up the necessary pipeline in Stable Diffusion by configuring the appropriate nodes, providing detailed descriptions for both the positive and negative prompts for the character, setting the weights, and using ControlNet to specify the desired head angles for generation.
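The prompt side of such a pipeline can be sketched in plain Python. This is only an illustration, not the workflow used in the project: the trait list, the weights, the negative terms, the yaw angles, and the `poses/...` reference paths are all assumptions, and `build_jobs` is a hypothetical helper. The `(term:weight)` form is the emphasis syntax commonly accepted by Stable Diffusion front ends such as ComfyUI.

```python
from dataclasses import dataclass


def weighted(term: str, weight: float = 1.0) -> str:
    """Format a prompt term with the (term:weight) emphasis syntax."""
    return term if weight == 1.0 else f"({term}:{weight:.2f})"


@dataclass(frozen=True)
class HeadShot:
    yaw_deg: int        # head turn in degrees; negative = left (assumed convention)
    positive: str       # positive prompt for this view
    negative: str       # negative prompt, shared by all views
    control_image: str  # hypothetical path to the ControlNet pose reference


# Traits taken from the character description; the weights are assumptions.
TRAITS = [
    ("ash blond hair", 1.2),
    ("piercing blue eyes", 1.2),
    ("blue velvet frock coat", 1.1),
    ("late 17th century", 1.0),
]
# Assumed negative terms, not the ones used in the project.
NEGATIVE = ["blurry", "deformed face", "extra fingers", "text", "watermark"]


def view_label(yaw_deg: int) -> str:
    """Describe the head angle in words for the positive prompt."""
    if yaw_deg == 0:
        return "front view"
    side = "left" if yaw_deg < 0 else "right"
    if abs(yaw_deg) >= 90:
        return f"{side} profile"
    return f"three-quarter view, head turned {side}"


def build_jobs(yaws: list[int]) -> list[HeadShot]:
    """Create one generation job per requested head angle."""
    base = ", ".join(weighted(term, w) for term, w in TRAITS)
    negative = ", ".join(NEGATIVE)
    return [
        HeadShot(
            yaw_deg=yaw,
            positive=f"portrait of Gideon Fletcher, {base}, {view_label(yaw)}",
            negative=negative,
            control_image=f"poses/head_yaw_{yaw}.png",
        )
        for yaw in yaws
    ]


if __name__ == "__main__":
    for job in build_jobs([-90, -45, 0, 45, 90]):
        print(job.yaw_deg, "|", job.positive)
```

Keeping the character traits in one list and varying only the view label means every angle is generated from the same description, which is what keeps the face consistent across the dataset.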

Around 25 images were created for the dataset, including a full-body illustration of the character. All images were made in 1024x1024 resolution. Using ControlNet, different head angles were set. The dataset was then manually cleaned of artifacts in Photoshop.
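Before training, it can help to confirm that every image in the dataset really has the stated 1024x1024 resolution. The sketch below assumes the images are saved as PNG files in a single folder (the folder name in the usage note is hypothetical) and reads the width and height straight from each PNG header using only the standard library.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path) -> tuple[int, int]:
    """Return (width, height) read from the IHDR chunk of a PNG file."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Bytes 0-7: signature, 8-11: chunk length, 12-15: chunk type,
    # 16-19: width, 20-23: height (both big-endian unsigned ints).
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def find_wrong_sized(folder, expected=(1024, 1024)) -> list[str]:
    """List the PNG files in `folder` whose size differs from `expected`."""
    return sorted(
        p.name for p in Path(folder).glob("*.png") if png_size(p) != expected
    )
```

Calling `find_wrong_sized("dataset")` returns an empty list when every PNG in the folder matches the expected resolution.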

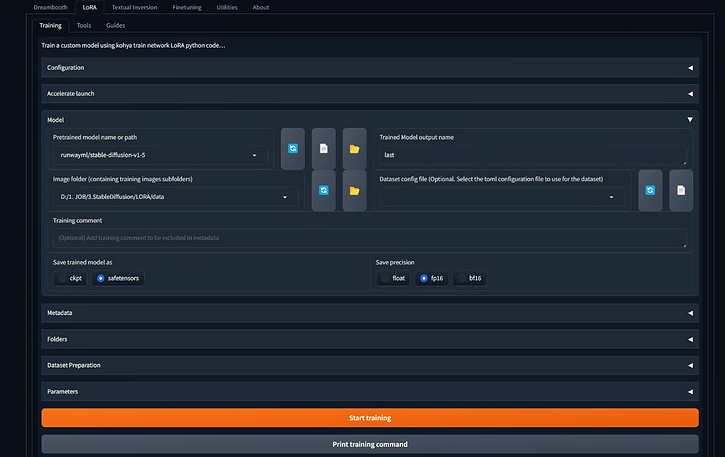

Afterward, the dataset is used to train a LoRA model. Training takes several hours.
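As a rough way to see where those hours go, the training length can be estimated from the dataset size. Only the figure of about 25 images comes from the project; the repeats, epochs, batch size, and seconds per step below are assumed values for illustration, and different LoRA trainers may count steps differently.

```python
import math


def lora_training_steps(num_images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """Total optimizer steps: each image is seen `repeats` times per epoch."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def estimated_hours(total_steps: int, seconds_per_step: float) -> float:
    """Convert a step count into wall-clock hours for a given step time."""
    return total_steps * seconds_per_step / 3600


if __name__ == "__main__":
    # 25 images is from the dataset above; every other number is an assumption.
    steps = lora_training_steps(num_images=25, repeats=10, epochs=10, batch_size=2)
    print(steps, "steps,", round(estimated_hours(steps, seconds_per_step=6.0), 1), "hours")
```

The step time depends heavily on the GPU and the training resolution, so the hours figure is only an order-of-magnitude check.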

Once the model is trained, it becomes easy to generate the character in various poses and locations. You just need to set up the correct pipeline in the nodes, define the positive and negative prompts, and adjust the settings; after that, you can generate anything you want.

Checking the results generated by the trained model


Creating a facial animation rig for a character from a single image in Stable Diffusion

Using nodes, you can set the desired facial emotion of the character by moving individual parts of the face. Thanks to David_Davant_Systems for sharing the workflow.

Farm Projects

This project is a creative video concept that captures the warmth and charm of farm life. I developed a 40–50-second video that tells a cozy story about five characters: a young couple, their son, and the boy's grandparents. The farm is populated with animals such as cows, alpacas, and chickens. All the illustrations were initially generated using Stable Diffusion and then refined in Photoshop for quality and detail. The project combines image-generation technology with a creative approach to convey the heartfelt atmosphere of farm life.

Video creative

Any CTA in any style

Ad banners for Google and Facebook

Make any changes to the image

Create any hair color in ComfyUI with a single click and prompt.

Create any character in ComfyUI with a single click and the right prompt.

In ComfyUI, it is possible to extend an image beyond its original borders, add new characters, or fix details.

Short video from picture